v. 1.1 (rev. 2)

Installation Guide

30 April 2005

Copyright © Members of the EGEE Collaboration. 2004.

See http://eu-egee.org/partners for details on the copyright holders.

EGEE (“Enabling Grids for E-science in Europe”) is a project funded by the European Union. For more information on the project, its partners and contributors please see http://www.eu-egee.org. You are permitted to copy and distribute verbatim copies of this document containing this copyright notice, but modifying this document is not allowed. You are permitted to copy this document in whole or in part into other documents if you attach the following reference to the copied elements:

“Copyright © 2004. Members of the EGEE Collaboration. http://www.eu-egee.org”

The information contained in this document represents the views of EGEE as of the date they are published. EGEE does not guarantee that any information contained herein is error-free or up to date.

EGEE MAKES NO WARRANTIES, EXPRESS, IMPLIED, OR STATUTORY, BY PUBLISHING THIS DOCUMENT.

Table of Contents

2.2. Standard Deployment Model

3. gLite Packages and Downloads

4. The gLite Configuration Model

4.1. The gLite Configuration Scripts

4.2. The gLite Configuration Files

4.2.1. Configuration Parameters Scope

4.2.2. The Local Service Configuration Files

4.2.3. The Global Configuration File

4.2.4. The Site Configuration File

4.2.7. Default Environment Variables

4.2.8. Configuration Overrides

5.2. Installation Pre-requisites

5.3. Security Utilities Installation

5.4. Security Utilities Configuration

5.5. Security Utilities Configuration Walkthrough

6. Information and Monitoring System (R-GMA)

6.2.1. R-GMA Deployment strategy

6.2.2. R-GMA Server deployment module

6.2.3. R-GMA Client deployment module

6.2.4. R-GMA servicetool deployment module

6.2.5. R-GMA GadgetIN (GIN) deployment module

7. VOMS Server and Administration Tools

7.2. Installation Pre-requisites

7.4. VOMS Server Configuration

7.5. VOMS Server Configuration Walkthrough

7.6. VOMS Administrators Registration

8.2. Installation Pre-requisites

8.3. Workload Manager System Installation

8.4. Workload Management System Configuration

8.5. Workload Management System Configuration Walkthrough

8.6. Managing the WMS Services

8.7. Starting the WMS Services at Boot

8.8. Publishing WMS Services to R-GMA

9. Logging and Bookkeeping Server

9.2. Installation Pre-requisites

9.4. Logging and Bookkeeping Server Installation

9.5. Logging and Bookkeeping Server Configuration

9.6. Logging and Bookkeeping Configuration Walkthrough

10. The TORQUE Resource Manager

10.1.1. TORQUE Server Overview

10.1.2. TORQUE Client Overview

10.2. Installation Pre-requisites

10.3.1. TORQUE Server Installation

10.3.2. TORQUE Server Service Configuration

12.1.1. TORQUE Server Configuration Walkthrough

12.1.2. Managing the TORQUE Server Service

12.2.1. TORQUE Client Installation

12.2.2. TORQUE Client Configuration

12.2.3. TORQUE Client Configuration Walkthrough

12.2.4. Managing the TORQUE Client

13.2. Installation Pre-requisites

13.2.3. Resource Management System

13.3. Computing Element Service Installation

13.4. Computing Element Service Configuration

13.5. Computing Element Configuration Walkthrough

13.6. Managing the CE Services

13.7. Starting the CE Services at Boot

13.8. Workspace Service Tech-Preview

14.2. Installation Pre-requisites

14.2.3. Resource Management System

14.3. Worker Node Installation

14.4. Worker Node Configuration

14.5. Worker Node Configuration Walkthrough

15.2. Installation Pre-requisites

15.3. Single Catalog Installation

15.4. Single Catalog Configuration

15.5. Single Catalog Configuration Walkthrough

15.6. Publishing Catalog Services to R-GMA

16. File Transfer Service Oracle

16.2. Installation Pre-requisites

16.2.4. Oracle Database Configuration

16.3. File Transfer Service Installation

16.4. File Transfer Service Oracle Configuration

16.5. File Transfer Service Oracle Configuration Walkthrough

16.6. Publishing File Transfer Services to R-GMA

17.2. Installation Pre-requisites

17.3. Metadata Catalog Installation

17.4. Metadata Catalog Configuration

17.5. Metadata Catalog Configuration Walkthrough

18.1.2. Installation pre-requisites

18.1.3. gLite I/O Server installation

18.1.4. gLite I/O Server Configuration

18.1.5. gLite I/O Server Configuration Walkthrough

18.2.2. Installation pre-requisites

18.2.3. gLite I/O Client installation

18.2.4. gLite I/O Client Configuration

19.2. Installation Pre-requisites

19.5. UI Configuration Walkthrough

19.6. Configuration for the UI users

20. The gLite Functional Test Suites

20.2.1. Test suite description

20.2.2. Installation Pre-requisites

20.3.1. Test suite description

20.3.2. Installation Pre-requisites

20.4.1. Test suite description

20.4.2. Installation Pre-requisites

20.5.1. Test suite description

20.5.2. Installation Pre-requisites

21. Appendix A: Service Configuration File Example

22. Appendix B: Site Configuration File Example

This document describes how to install and configure the EGEE middleware known as gLite. The objective is to provide clear instructions for administrators on how to deploy gLite components on machines at their site.

Glossary

| Acronym | Meaning |
|---|---|
| CE | Computing Element |
| R-GMA | Relational Grid Monitoring Architecture |
| WMS | Workload Management System |
| WN | Worker Node |
| FTS | File Transfer Service |
| LB | Logging and Bookkeeping |
| SC | Single Catalog |
| UI | User Interface |
| VOMS | Virtual Organization Membership Service |

Definitions

| Term | Definition |
|---|---|
| Service | A single high-level unit of functionality |
| Node | A computer where one or more services are deployed |

The gLite middleware is a Service Oriented Grid middleware providing services for managing distributed computing and storage resources and the required security, auditing and information services.

The gLite system is composed of a number of high level services that can be installed on individual dedicated computers (nodes) or combined in various ways to satisfy site requirements. This installation guide follows a standard deployment model whereby most of the services are installed on dedicated computers. However, other examples of valid node configuration are also shown.

The following high-level services are part of this release of the gLite middleware:

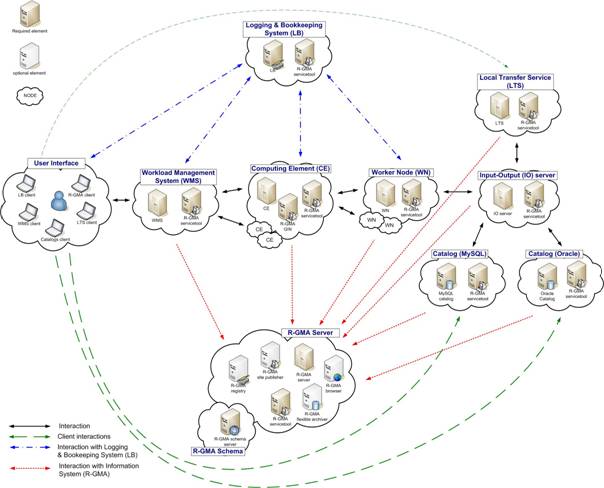

Figure 1: gLite Service Deployment Scenario shows the standard deployment model for these services.

Each site has to provide the local services for job and data management as well as information and monitoring:

Figure 1: gLite Service Deployment Scenario

The figure shows the proposed mapping of services onto physical machines. This mapping gives the best performance and service resilience. Smaller sites may however consider mapping multiple services onto the same machine. This applies in particular to the CE and package manager and to the SC and the LTS.

Instead of the distributed deployment of the catalogs (a local catalog and a global catalog), a centralized deployment of just a global catalog can be considered as well. This is the configuration supported in gLite 1.1.

The VO services act on the Grid level and comprise the Security services, Workload Management services, and Information and Monitoring services. Each VO should have an instance of these services, although physical service instances can mostly be shared among VOs. For some services, even multiple instances per VO can be provided, as indicated below:

· Security services

o The Virtual Organization Membership Service (VOMS) is used for managing the membership and member rights within a VO. VOMS also acts as attribute authority.

o myProxy is used as a secure proxy store.

· Workload Management services

o The Workload Management Service (WMS) is used to submit jobs to the Grid.

o The Logging and Bookkeeping service (LB) keeps track of the job status information.

The WMS and the LB can be deployed independently but due to their tight interactions it is recommended to deploy them together. Multiple instances of these services may be provided for a VO.

· Information and Monitoring services

o The R-GMA Registry Servers and Schema Server are used for binding information consumers and producers. There can be more than one Registry Server that can be replicated for resilience reasons.

· Single Catalog (SC)

o The single catalog is used for browsing the LFN space and for finding the locations (sites) where files are stored. This is needed in particular by the WMS.

· User Interface

o The User Interface (UI) combines all the clients that allow the user to directly interact with the Grid services.

In the rest of this guide, installation instructions for the individual modules are presented. The order of chapters represents the suggested installation order for setting up a gLite grid.

The gLite middleware is currently published in the form of RPM packages and installation scripts from the gLite web site at:

../../../../../../glite-web/egee/packages

Required external dependencies in RPM format can also be obtained from the gLite project web site at:

../../../../../../glite-web/egee/packages/externals/bin/rhel30/RPMS

Deployment modules for each high-level gLite component are provided on the web site and are a straightforward way of downloading and installing all the RPMs for a given component. A configuration script is provided with each module to configure, deploy and start the service or services in each high-level module.

Installation and configuration of the gLite services are kept well separated. Therefore the RPMS required to install each service or node can be deployed on the target computers in any suitable way. The use of dedicated RPMS management tools is actually recommended for production environments. Once the RPMS are installed, it is possible to run the configuration scripts to initialize the environment and the services.

gLite is also distributed using the APT package manager. More details on the apt cache address and the required list entries can be found on the main packages page of the gLite web site (../../../../../../glite-web/egee/packages/APT.asp).

gLite is also available in the form of source and binary tarballs from the gLite web site and from the EGEE CVS server at:

jra1mw.cvs.cern.ch:/cvs/jra1mw

The server supports authenticated ssh protocol 1 and Kerberos 4 access, as well as anonymous pserver access (username: anonymous).

Each gLite deployment module contains a number of RPMS for the necessary internal and external components that make up a service or node (RPMS that are normally part of standard Linux distributions are not included in the gLite installer scripts). In addition, each module contains one or more configuration RPMS providing configuration scripts and files.

Each module contains at least the following configuration RPMS:

| Name | Definition |
|---|---|
| glite-config-x.y.z-r.noarch.rpm | The glite-config RPM contains the global configuration files and scripts required by all gLite modules |
| glite-<service>-config-x.y.z-r.noarch.rpm | The glite-<service>-config RPM contains the configuration files and scripts required by a particular service, such as ce, wms or rgma |

In addition, a mechanism to load remote configuration files from URLs is provided. Refer to the Site Configuration section later in this chapter (§4.2.3).

All configuration scripts are installed in:

$GLITE_LOCATION/etc/config/scripts

where $GLITE_LOCATION is the root of the gLite packages installation. By default $GLITE_LOCATION = /opt/glite.

The scripts are written in python and follow a naming convention. Each file is called:

glite-<service>-config.py

where <service> is the name of the service they can configure.

In addition, the same scripts directory contains the gLite Installer library (gLiteInstallerLib.py) and a number of helper scripts used to configure various applications required by the gLite services (globus.py, mysql.py, tomcat.py, etc).

The gLite Installer library and the helper scripts are contained in the glite-config RPM. All service scripts are contained in the respective glite-<service>-config RPM.

All parameters in the gLite configuration files fall into one of three categories: user-defined, advanced and system parameters.

The gLite configuration files are XML-encoded files containing all the parameters required to configure the gLite services. The configuration files are distributed as templates and are installed in the $GLITE_LOCATION/etc/config/templates directory.

The configuration files follow a similar naming convention as the scripts. Each file is called:

glite-<service>.cfg.xml

Each gLite configuration file contains a global section called <parameters/> and may contain one or more <instance/> sections if multiple instances of the same service or client can be configured and started on the same node (see the configuration file example in Appendix A). When multiple instances can be defined for a service, the global <parameters/> section applies to all instances of the service or client, while the parameters in each <instance/> section are specific to a particular named instance and can override the values in the <parameters/> section.

The configuration files support variable substitution. Values can be expressed in terms of other configuration parameters or environment variables by using the ${} notation (for example ${GLITE_LOCATION}).
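The substitution described above can be sketched as follows. This is only an illustration, not the code used by the gLite scripts: the function name `expand`, the parameter `scripts.dir` and the lookup order (environment first, then other parameters) are assumptions made for the example.

```python
import os
import re

# Matches ${name} references inside a parameter value.
_VAR = re.compile(r"\$\{([^}]+)\}")


def expand(value, params, environ=None, _depth=0):
    """Replace every ${name} in value with the environment variable or
    configuration parameter of that name (assumed order: environment first)."""
    environ = os.environ if environ is None else environ
    if _depth > 10:
        raise ValueError("too many nested substitutions (circular reference?)")

    def lookup(match):
        name = match.group(1)
        if name in environ:
            return environ[name]
        if name in params:
            # A parameter value may itself contain ${...} references.
            return expand(params[name], params, environ, _depth + 1)
        raise KeyError(f"undefined variable: {name}")

    return _VAR.sub(lookup, value)


params = {
    "GLITE_LOCATION": "/opt/glite",
    # Hypothetical parameter, used only for this illustration.
    "scripts.dir": "${GLITE_LOCATION}/etc/config/scripts",
}

# An empty environment is passed so the result does not depend on the caller's shell.
print(expand("${scripts.dir}/glite-ce-config.py", params, environ={}))
```

With the values above, the nested reference resolves to a path under /opt/glite; an undefined name raises an error instead of being silently left in place.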

The templates directory can also contain additional service templates used by the configuration scripts during their execution (like for example the gLite I/O service templates).

Note: When using a local configuration model, the corresponding configuration files must be copied from the templates directory to $GLITE_LOCATION/etc/config before running the configuration scripts, and all the user-defined parameters must be correctly instantiated (refer also to the Configuration Parameters Scope paragraph later in this section). This is not necessary if using the site configuration model (see below).

The global configuration file glite-global.cfg.xml contains all parameters that have gLite-wide scope and are applicable to all gLite services. The parameters in this file are loaded first by the configuration scripts and cannot be overridden by individual service configuration files.

Currently the global configuration file defines the following parameters:

| Parameter | Default value | Description |
|---|---|---|
| **User-defined Parameters** | | |
| site.config.url | | The URL of the Site Configuration file for this node. The values defined in the Site Configuration file are applied first and are overridden by values specified in the local configuration files. Leave this parameter empty or remove it to use local configuration only. |
| **Advanced Parameters** | | |
| GLITE_LOCATION | /opt/glite | |
| GLITE_LOCATION_VAR | /var/glite | |
| GLITE_LOCATION_LOG | /var/log/glite | |
| GLITE_LOCATION_TMP | /tmp/glite | |
| GLOBUS_LOCATION | /opt/globus | Environment variable pointing to the Globus package. |
| GPT_LOCATION | /opt/gpt | Environment variable pointing to the GPT package. |
| JAVA_HOME | /usr/java/j2sdk1.4.2_08 | Environment variable pointing to the SUN Java JRE or J2SE package. |
| CATALINA_HOME | /var/lib/tomcat5 | Environment variable pointing to the Jakarta Tomcat package. |
| host.certificate.file | /etc/grid-security/hostcert.pem | The host certificate (public key) file location |
| host.key.file | /etc/grid-security/hostkey.pem | The host certificate (private key) file location |
| ca.certificates.dir | /etc/grid-security/certificates | The location where CA certificates are stored |
| user.certificate.path | .certs | The location of the user certificates relative to the user home directory |
| host.gridmapfile | /etc/grid-security/grid-mapfile | Location of the grid mapfile |
| host.gridmap.dir | /etc/grid-security/gridmapdir | The location of the account lease information for dynamic allocation |
| **System Parameters** | | |
| installer.export.filename | /etc/glite/profile.d/glite_setenv.sh | Full path of the script containing environment definitions. This file is automatically generated by the configuration script. If it exists, the new values are appended. |
| tomcat.user.name | tomcat4 | Name of the user account used to run tomcat. |
| tomcat.user.group | tomcat4 | Group of the user specified in the parameter ‘tomcat.user.name’ |

Table 1: Global Configuration Parameters

All gLite configuration scripts implement a mechanism to load configuration information from a remote URL. This mechanism can be used to configure the services from a central location for example to propagate site-wide configuration.

The URL of the configuration file can be specified as the site.config.url parameter in the global configuration file of each node or as a command-line parameter when launching a configuration script, for example:

glite-ce-config.py --siteconfig=http://server.domain.com/sitename/siteconfig.xml

In the latter case, the site configuration file is used only for that run of the configuration script and all values are discarded afterwards. For normal operations it is necessary to specify the site configuration URL in the glite-global.cfg.xml file.

The site configuration file can contain a global section called <parameters/> and one <node/> section for each node to be remotely configured (see the configuration file example in Appendix B). Each <node/> section must be qualified with the host name of the target node, for example:

<node name="lxb1428.cern.ch">

…

</node>

where the host name must be the value of the $HOSTNAME environment variable on the node. The <parameters/> section contains parameters that apply to all nodes referencing the site configuration file.

The <node/> sections can contain the same parameters that are defined in the local configuration files. If more than one service is installed on a node, the corresponding <node/> section can contain a combination of all parameters of the individual configuration files. For example if a node runs both the WMS and the LB Server services, then the corresponding <node/> section in the site configuration file may contain a combination of the parameters contained in the local configuration files for the WMS and the LB Server modules.

If a user-defined parameter (see later in §4.2.1 the definition of parameters scope) is defined in the site configuration file, the same parameter doesn’t need to be defined in the local file (it can therefore keep the token value ‘changeme’ or be removed altogether). However, if a parameter is defined in the local configuration file, it overrides whatever value is specified in the site configuration file. If a site configuration file contains all necessary values to configure a node, it is not necessary to create the local configuration files. The only configuration file that must always be present locally in the /opt/glite/etc/config/ directory is the glite-global.cfg.xml file, since it contains the parameter that specifies the URL of the site configuration file.

This mechanism allows distributing a site configuration for all nodes and at the same time gives the possibility of overriding some or all parameters locally in case of need.
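The precedence rules above can be sketched in a few lines of Python. This is a minimal illustration that works on plain dictionaries instead of the real XML files; the function name `effective_parameters`, the example values, and the assumption that a <node/> section overrides the site-wide <parameters/> section are not taken from the gLite scripts.

```python
PLACEHOLDER = "changeme"  # token value left in unedited local templates


def effective_parameters(site_global, site_nodes, hostname, local):
    """Combine site-wide, per-node and local values into one parameter set."""
    merged = dict(site_global)                    # site <parameters/> section
    merged.update(site_nodes.get(hostname, {}))   # matching <node/> section (assumed to win)
    for name, value in local.items():
        if value == PLACEHOLDER:
            # An untouched placeholder does not override a site value.
            merged.setdefault(name, value)
        else:
            merged[name] = value                  # a locally defined value always wins
    unresolved = sorted(n for n, v in merged.items() if v == PLACEHOLDER)
    if unresolved:
        raise ValueError("parameters still set to 'changeme': " + ", ".join(unresolved))
    return merged


# Example values are hypothetical; the parameter names appear elsewhere in this guide.
site_global = {"rgma.server.hostname": "rgma.example.org"}
site_nodes = {"lxb1428.cern.ch": {"cron.mailto": "grid-admin@example.org"}}
local = {"cron.mailto": "changeme", "glite.installer.verbose": "false"}

print(effective_parameters(site_global, site_nodes, "lxb1428.cern.ch", local))
```

Here cron.mailto comes from the node section because the local file still holds the placeholder, while glite.installer.verbose comes from the local file.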

New configuration information can be easily propagated simply by publishing a new configuration file and rerunning the service configuration scripts.

In addition, several different models are possible. Instead of having a single configuration file containing all parameters for all nodes, it is possible for example to split the parameters into several files according to specific criteria and point different services to different files. For example, it is possible to put all parameters required to configure the Worker Nodes in one file and all parameters for the servers in a separate file, or to have a separate file for each node, and so on.

Several configuration files can also be managed as a single file by using the XML inclusion mechanism. Using this standard mechanism, it is possible to include by reference one or more files in a master file and point the gLite services configuration scripts to the master file. In order to use this mechanism, the <siteconfig> tag in the master file must be qualified with the XInclude namespace as follows:

<siteconfig xmlns:xi="http://www.w3.org/2001/XInclude">

The individual files can then be included using the tag:

<xi:include href="glite-xxx.cfg.xml" />

where the value of the href attribute is a file path relative to the location of the master file. The content of the referenced file is included “as-is” in the master document when it is downloaded from the web server. The gLite service gets a single XML file where all the <xi:include> tags are replaced with the content of the referenced files.
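To see what the inclusion produces, the following sketch resolves an <xi:include> tag with Python's standard `xml.etree.ElementInclude` module. It only illustrates the XInclude mechanism itself: in the setup described above the inclusion is already resolved when the master file is downloaded, and the file names, host name and in-memory "files" below are assumptions made for the example.

```python
import xml.etree.ElementTree as ET
from xml.etree import ElementInclude

# In-memory stand-ins for files published next to each other on a web server.
FILES = {
    "siteconfig.xml": (
        '<siteconfig xmlns:xi="http://www.w3.org/2001/XInclude">'
        '<xi:include href="glite-wn.cfg.xml" />'
        "</siteconfig>"
    ),
    "glite-wn.cfg.xml": '<node name="wn1.example.org"><parameters/></node>',
}


def loader(href, parse, encoding=None):
    """Return the parsed document for href; only XML inclusion is handled here."""
    if parse != "xml":
        raise ValueError("only parse='xml' is supported in this sketch")
    return ET.fromstring(FILES[href])


def load_site_config(name):
    """Parse the master file and replace each <xi:include> with the referenced content."""
    root = ET.fromstring(FILES[name])
    ElementInclude.include(root, loader=loader)
    return root


root = load_site_config("siteconfig.xml")
print(ET.tostring(root, encoding="unicode"))
```

After processing, the <siteconfig> element contains the <node> element from the referenced file and no <xi:include> tags remain.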

The configuration scripts and files described above represent the common configuration interfaces of all gLite services. However, since the gLite middleware is a combination of various old and new services, not all services can natively use the common configuration model. Many services come with their own configuration files and formats. Extensive work is being done to make all services use the same model, but until the migration is completed, the common configuration files must be considered as the public configuration interfaces for the system. The configuration scripts do all the necessary work to map the parameters in the public configuration files to parameters in service-specific configuration files. In addition, many of the internal configuration files are dynamically created or modified by the public configuration scripts.

The goal is to provide the users with a consistent set of files and scripts that will not change in the future even if the internal behaviour may change. It is therefore recommended, whenever possible, to use only the common configuration files and scripts and not to modify the internal service-specific configuration files directly.

When any gLite configuration script is run, it creates or modifies a general configuration file called glite_setenv.sh (and glite_setenv.csh) in /etc/glite/profile.d (the location can be changed using a system-level parameter in the global configuration file).

This file contains all the environment definitions needed to run the gLite services. This file is automatically added to the .bashrc file of users under direct control of the middleware, such as service accounts and pool accounts. In addition, if needed, the .bash_profile file of the accounts is modified to source the .bashrc file and to set BASH_ENV=.bashrc. The proper environment is therefore created every time an account logs in, in whatever way (interactive, non-interactive or script).

Other users not under control of the middleware can manually source the glite_setenv.sh file as required.

In case a gLite service or client is installed using a non-privileged user (if foreseen by the service or client installation), the glite_setenv.sh file is created in $GLITE_LOCATION/etc/profile.d.

By default the gLite configuration files and scripts define the following environment variables:

| Variable | Default value |
|---|---|
| GLITE_LOCATION | /opt/glite |
| GLITE_LOCATION_VAR | /var/glite |
| GLITE_LOCATION_LOG | /var/log/glite |
| GLITE_LOCATION_TMP | /tmp/glite |
| PATH | /opt/glite/bin:/opt/glite/externals/bin:$PATH |
| LD_LIBRARY_PATH | /opt/glite/lib:/opt/glite/externals/lib:$LD_LIBRARY_PATH |

The first four variables can be modified in the global configuration file or exported manually before running the configuration scripts. If these variables are already defined in the environment, they take priority over the values defined in the configuration files.
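As a rough illustration of this priority rule, the sketch below builds the kind of export lines a glite_setenv.sh file might contain. The function name `render_setenv`, the exact file layout, and the assumption that PATH and LD_LIBRARY_PATH follow the effective GLITE_LOCATION are not taken from the gLite scripts.

```python
import os

# Defaults as listed in the global configuration parameters.
DEFAULTS = {
    "GLITE_LOCATION": "/opt/glite",
    "GLITE_LOCATION_VAR": "/var/glite",
    "GLITE_LOCATION_LOG": "/var/log/glite",
    "GLITE_LOCATION_TMP": "/tmp/glite",
}


def render_setenv(configured=None, environ=None):
    """Return export lines; environment values beat configured values, which beat defaults."""
    environ = os.environ if environ is None else environ
    values = dict(DEFAULTS)
    values.update(configured or {})       # values from the global configuration file
    for name in DEFAULTS:
        if name in environ:               # already exported by the administrator
            values[name] = environ[name]
    root = values["GLITE_LOCATION"]
    lines = [f"export {name}={values[name]}" for name in DEFAULTS]
    # Assumption: the search paths are derived from the effective GLITE_LOCATION.
    lines.append(f"export PATH={root}/bin:{root}/externals/bin:$PATH")
    lines.append(f"export LD_LIBRARY_PATH={root}/lib:{root}/externals/lib:$LD_LIBRARY_PATH")
    return "\n".join(lines) + "\n"


print(render_setenv(environ={"GLITE_LOCATION_TMP": "/scratch/glite"}))
```

In this example only GLITE_LOCATION_TMP is taken from the environment; the other variables keep their defaults.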

It is possible to override the values of the parameters in the gLite configuration files by setting appropriate key/value pairs in the following files:

/etc/glite/glite.conf

~/.glite/glite.conf

The first file has system-wide scope, while the second has user scope. These files are read by the configuration scripts before the common configuration files, and their values take priority over the values defined in the common configuration files.
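The override behaviour can be pictured with the following sketch. The syntax of glite.conf is not specified here beyond "key/value pairs", so the `key = value` layout with `#` comments, the function names, and the choice that the user file wins over the system-wide file are all assumptions made for illustration.

```python
def parse_overrides(text):
    """Parse 'key = value' lines (assumed syntax), skipping blanks and # comments."""
    overrides = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key/value pair: {raw!r}")
        overrides[key.strip()] = value.strip()
    return overrides


def apply_overrides(common, system_text="", user_text=""):
    """Overlay /etc/glite/glite.conf and then ~/.glite/glite.conf on the common values."""
    merged = dict(common)
    merged.update(parse_overrides(system_text))  # system-wide scope
    merged.update(parse_overrides(user_text))    # user scope (assumed to win)
    return merged


common = {"cron.mailto": "admin@example.org", "glite.installer.verbose": "true"}
system_text = "# site-wide settings\nglite.installer.verbose = false\n"
user_text = "cron.mailto = me@example.org\n"

print(apply_overrides(common, system_text, user_text))
```

Both override files take priority over the common configuration values; which of the two wins when they disagree should be checked against the actual scripts.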

The gLite Security Utilities module contains the CA Certificates distributed by the EU Grid PMA. In addition, it contains a number of utility scripts needed to create or update the local grid mapfile from a VOMS server and to periodically update the CA Certificate Revocation Lists. This module is presented first, since it is used by almost all other modules. However, it is not normally installed manually by itself, but automatically as part of the other modules.

The CA Certificates are installed in the default directory:

/etc/grid-security/certificates

This is not configurable at the moment. The installation script downloads the latest available version of the CA RPMS from the gLite software repository.

The glite-mkgridmap script, which is used to update the local grid mapfile, and its configuration file glite-mkgridmap.conf are installed respectively in

$GLITE_LOCATION/sbin

and

$GLITE_LOCATION/etc

The script can be run manually (after customizing its configuration file). Running glite-mkgridmap doesn’t preserve the existing grid-mapfile. However, a wrapper script is provided in $GLITE_LOCATION/etc/config/scripts/mkgridmap.py to update the grid-mapfile preserving any additional entry in the file not downloaded by glite-mkgridmap.
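The idea of preserving additional entries can be sketched as below. This is not the code of mkgridmap.py: it assumes the usual grid-mapfile layout of one quoted certificate DN followed by a local account per line, it lets freshly downloaded entries win on conflicts, and it cannot tell a manually added entry from a stale downloaded one. All DNs and account names are made up.

```python
import shlex


def parse_gridmap(text):
    """Return {DN: account} from lines of the form "DN" account."""
    entries = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        dn, account = shlex.split(line)   # handles the quoted DN containing spaces
        entries[dn] = account
    return entries


def merge_gridmap(existing_text, downloaded_text):
    """Keep entries found only in the existing file and add the downloaded ones."""
    merged = parse_gridmap(existing_text)
    merged.update(parse_gridmap(downloaded_text))   # downloaded entries win on conflicts
    return "".join(f'"{dn}" {account}\n' for dn, account in sorted(merged.items()))


existing = (
    '"/C=CH/O=Example/CN=Local Admin" gridadmin\n'
    '"/C=CH/O=Example/CN=Jane Doe" olduser\n'
)
downloaded = '"/C=CH/O=Example/CN=Jane Doe" dteam001\n'

print(merge_gridmap(existing, downloaded), end="")
```

The locally added entry for "Local Admin" survives the update, while the entry for "Jane Doe" takes the account from the downloaded list.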

The Security Utilities module configuration script also installs a crontab file in /etc/cron.d that executes the mkgridmap.py script every night at 02:00. The installation of this cron job and the execution of the mkgridmap.py script during the configuration are optional and can be enabled using a configuration parameter (see the configuration walkthrough for more information).

Some services need to run the mkgridmap.py script as part of their initial configuration (this is currently the case for example of the WMS). In this case the installation of the cron job and execution of the script at configuration must be enabled. This is indicated in each case in the appropriate chapter.

The fetch-crl script is used to update the CA Certificate Revocation Lists. This script is provided by the EU Grid PMA organization. It is installed in:

/usr/bin

The Security Utilities module configuration script installs a crontab file in /etc/cron.d that executes glite-fetch-crl every four hours. The CRLs are installed in the same directory as the CA certificates, /etc/grid-security/certificates. The module configuration file (glite-security-utils.cfg.xml) allows specifying an e-mail address to which the errors generated when running the cron job are sent.

These installation instructions are based on the RPMS distribution of gLite. It is also assumed that the target server platform is Red Hat Enterprise Linux 3.0 or any binary compatible distribution, such as Scientific Linux or CentOS. Whenever a package needed by gLite is not distributed as part of gLite itself, it is assumed it can be found in the list of RPMS of the original OS distribution.

The gLite Security Utilities module is installed as follows:

| Parameter | Default value | Description |
|---|---|---|
| **User-defined Parameters** | | |
| cron.mailto | | E-mail address to which the stderr of the installed cron jobs is sent |
| **Advanced Parameters** | | |
| glite.installer.verbose | true | Produce verbose output when running the script |
| glite.installer.checkcerts | true | Activate a check for host certificates and stop the script if not available. The certificates are looked for in the location specified by the global parameters host.certificate.file and host.key.file |
| fetch-crl.cron.tab | `00 */4 * * *` | The cron tab to use for the fetch-crl cron job. |
| install.fetch-crl.cron | true | Install the glite-fetch-crl cron job. Possible values are 'true' (install the cron job) or 'false' (do not install the cron job) |
| install.mkgridmap.cron | false | Install the glite-mkgridmap cron job. Possible values are 'true' (install the cron job) or 'false' (do not install the cron job) |
| **System Parameters** | | |

Table 2: Security Utilities Configuration Parameters
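To show how the fetch-crl.cron.tab and cron.mailto parameters could end up in a file under /etc/cron.d, here is a small sketch. It relies only on the standard /etc/cron.d line format (five schedule fields, a user name, then the command); the function name, the user and the command path are placeholders, not the values written by the gLite configuration script.

```python
def render_cron_file(schedule, user, command, mailto=""):
    """Build the text of an /etc/cron.d file with a single job."""
    if len(schedule.split()) != 5:
        raise ValueError("a cron schedule needs five fields: minute hour day month weekday")
    lines = []
    if mailto:
        lines.append(f"MAILTO={mailto}")   # cron mails the job output to this address
    lines.append(f"{schedule} {user} {command}")
    return "\n".join(lines) + "\n"


# Default schedule from Table 2: minute 00 of every fourth hour.
print(render_cron_file("00 */4 * * *", "root",
                       "/path/to/glite-fetch-crl",      # placeholder command path
                       mailto="grid-admin@example.org"))

# A nightly job at 02:00, as described for the mkgridmap cron job.
print(render_cron_file("0 2 * * *", "root", "/path/to/mkgridmap.py"))
```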

The Security Utilities configuration script performs the following steps:

R-GMA (Relational Grid Monitoring Architecture) is the Information and Monitoring Service of gLite. It is based on the Grid Monitoring Architecture (GMA) from the Global Grid Forum (GGF), a simple Consumer-Producer model that describes the information infrastructure of a Grid as a set of consumers (which request information), producers (which provide information) and a central registry that mediates the communication between producers and consumers. R-GMA offers a global view of the information as if each Virtual Organisation had one large relational database.

Producers contact the registry to announce their intention to publish data, and consumers contact the registry to identify producers, which can provide the data they require. The data itself passes directly from the producer to the consumer: it does not pass through the registry.

R-GMA adds a standard query language (a subset of SQL) to the GMA model, so consumers issue SQL queries and receive tuples (database rows) published by producers, in reply. R-GMA also ensures that all tuples carry a time-stamp, so that monitoring systems, which require time-sequenced data, are inherently supported.
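The interaction between registry, producers and consumers can be pictured with the toy model below. It is not the R-GMA API: the class and method names and the table name `ServiceStatus` are invented for illustration, and a Python predicate stands in for the SQL subset that R-GMA consumers actually use.

```python
import time


class Registry:
    """Knows which producers publish which table; never sees the data itself."""

    def __init__(self):
        self._producers = {}

    def register(self, table, producer):
        self._producers.setdefault(table, []).append(producer)

    def lookup(self, table):
        return list(self._producers.get(table, []))


class Producer:
    def __init__(self, registry, table):
        self.table = table
        self._tuples = []
        registry.register(table, self)      # announce the intention to publish

    def publish(self, **row):
        # Every tuple carries a time-stamp.
        self._tuples.append({**row, "timestamp": time.time()})

    def query(self, predicate):
        return [row for row in self._tuples if predicate(row)]


class Consumer:
    def __init__(self, registry):
        self._registry = registry

    def select(self, table, predicate=lambda row: True):
        rows = []
        for producer in self._registry.lookup(table):   # ask the registry who publishes
            rows.extend(producer.query(predicate))       # data flows directly from the producer
        return sorted(rows, key=lambda row: row["timestamp"])


registry = Registry()
site_a = Producer(registry, "ServiceStatus")
site_b = Producer(registry, "ServiceStatus")
site_a.publish(site="A", up=True)
site_b.publish(site="B", up=False)

consumer = Consumer(registry)
print(consumer.select("ServiceStatus", lambda row: row["up"]))
```

The registry is contacted only to find matching producers; the tuples themselves are fetched from each producer directly, which mirrors the data flow described above.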

The gLite R-GMA Server is normally the first module installed as part of a gLite grid, since all services require it to publish service information.

The R-GMA Server is divided into four components:

The client part of R-GMA contains the producers and consumers of information. There is one generic client and a set of four specialized clients, each dealing with a certain type of information:

Client to make the data from the R-GMA site-publisher, servicetool and GIN constantly available. By default the glue and service tables are archived; however, this can be configured.

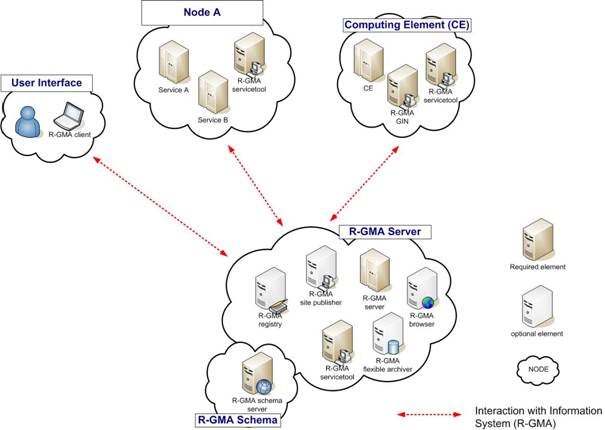

Figure 2 gives an overview of the R-GMA architecture and the distribution of the different R-GMA components.

Figure 2: R-GMA components

In order to facilitate the installation of the information system R-GMA, the different components of the server and clients have been combined into one R-GMA server deployment module and several client sub-deployment modules that are automatically installed together with the corresponding gLite deployment modules that use them. Table 3 gives a list of R-GMA deployment modules, their content and/or the list of gLite deployment modules that install/use them.

| Deployment module | Contains | Used / included by |
|---|---|---|
| R-GMA server | R-GMA server, R-GMA registry server, R-GMA schema server, R-GMA browser, R-GMA site publisher, R-GMA data archiver, R-GMA servicetool | |
| R-GMA client | R-GMA client APIs | User Interface (UI), Worker Node (WN) |
| R-GMA servicetool | R-GMA servicetool | Computing Element (CE), File Transfer Service (Oracle), Data Single Catalog (MySQL), Data Single Catalog (Oracle), I/O-Server, Logging & Bookkeeping (LB), R-GMA server, Torque Server, VOMS Server, Workload Management System (WMS) |
| R-GMA GIN | R-GMA GadgetIN | Computing Element (CE) |

Table 3: R-GMA deployment modules

In order to use the information system R-GMA, you first have to install the R-GMA server on one node. If you want, you can install further R-GMA servers on other nodes. The following rules have to be taken into account when installing a single or multiple servers and enabling/disabling the different options of the server(s):

Next, you can install the different services, e.g. the Computing Element. All necessary R-GMA components needed by a service are automatically downloaded and installed together with the service. You will only need to configure the corresponding parts of R-GMA by modifying the corresponding configuration files accordingly.

There is one common R-GMA configuration file (glite-rgma-common.cfg.xml) that is used by all R-GMA components to handle common R-GMA settings and that is shipped with each R-GMA component. In addition, each R-GMA component comes with its own configuration file (see the following sections for details).

The R-GMA server is the central server of the R-GMA service infrastructure. It contains the four R-GMA server parts – server, schema, registry and browser (see section 6.1.1) as well as the R-GMA clients – R-GMA servicetool, site publisher and data archiver (see section 6.1.2):

These installation instructions are based on the RPMS distribution of gLite. It is also assumed that the target server platform is Red Hat Linux 3.0 or any binary compatible distribution, such as Scientific Linux or CentOS. Whenever a package needed by gLite is not distributed as part of gLite itself, it is assumed it can be found in the list of RPMS of the original OS distribution.

The Java JRE or JDK are required to run the R-GMA Server. This release requires v. 1.4.2 revision 08. The JDK/JRE version to be used is a parameter in the gLite global configuration file. Please change it according to your version and location.

Due to license reasons, we cannot redistribute Java. Please download it from http://java.sun.com/ and install it if you have not yet installed it.

| Parameter | Default value | Description |
|---|---|---|
| User-defined parameters | | |
| rgma.server.hostname | | Hostname of the R-GMA server. |
| rgma.schema.hostname | | Hostname of the R-GMA schema service. Example: lxb1420.cern.ch (See also configuration parameter ‘rgma.server.run_schema_service’ in the R-GMA server configuration file in case you install a server). |
| rgma.registry.hostname | | Hostname(s) of the R-GMA registry service. You must specify at least one and you can specify several if you want to use several registries. This is an array parameter. Example: lxb1420.cern.ch (See also configuration parameter ‘rgma.server.run_registry_service’ in the R-GMA server configuration file in case you install a server). |
| Advanced Parameters | | |
| System Parameters | | |
| rgma.user.name | rgma | Name of the user account used to run the R-GMA gLite services. Example: rgma |
| rgma.user.group | rgma | Group of the user specified in the parameter ‘rgma.user.name’. Example: rgma |

Table 4: R-GMA common configuration parameters

| Parameter | Default value | Description |
|---|---|---|
| User-defined Parameters | | |
| rgma.server. | | MySQL root password. Example: verySecret |
| rgma.server. | | Whether to run your own schema server (yes\|no). To run it on your machine, set this to ‘yes’ and set the parameter ‘rgma.schema.hostname’ to the hostname of your machine; otherwise set it to ‘no’ and set ‘rgma.schema.hostname’ to the hostname of the schema server you want to use. Example: yes |
| rgma.server. | | Whether to run your own registry server (yes\|no). To run it on your machine, set this to ‘yes’ and add your hostname to the parameter list ‘rgma.registry.hostname’; otherwise set it to ‘no’. Example: yes |
| rgma.server. | | Run an R-GMA browser (yes\|no). Running a browser is optional but useful. Example: yes |
| rgma.server. | | Run the R-GMA data archiver (yes\|no). Running an archiver makes the data from the site-publisher, servicetool and GadgetIN constantly available. If you turn on this option, by default the glue and service tables are archived. To change the archiving behaviour, you have to create/change the archiver configuration file and point the parameter ‘rgma.server. Example: yes |
| rgma.server.run_site_publisher | | Run the R-GMA site-publisher (yes\|no). Running the site-publisher publishes your site to R-GMA. Example: yes |
| rgma.site-publisher.contact.system_administrator | | Contact email address of the site system administrator. Example: systemAdministrator@mysite.com |
| rgma.site-publisher.contact.user_support | | Contact email address of the user support. Example: userSupport@mysite.com |
| rgma.site-publisher.contact.site_security | | Contact email address of the person responsible for site security. Example: security@mysite.com |
| rgma.site-publisher.location.latitude | | Latitude of your site. Please go to 'http://www.multimap.com/' to find the correct value for your site. Example: 46.2341 |
| rgma.site-publisher.location.longitude | | Longitude of your site. Please go to 'http://www.multimap.com/' to find the correct value for your site. Example: 6.0447 |
| Advanced Parameters | | |
| glite.installer.verbose | true | Enable verbose output. Example: true |
| rgma.server. | 1000 | Maximum number of threads that are created for the tomcat http connector to process requests. This in turn specifies the maximum number of concurrent requests that the connector can handle. Example: 1000 |
| rgma.site-publisher.site-name | ${HOSTNAME} | Hostname of the site. It has to be a DNS entry owned by the site and must not be shared with another site (i.e. it uniquely identifies the site). It normally defaults to the DNS name of the R-GMA Server running the Site Publisher service. Example: lxb1420.cern.ch |
| rgma.server.archiver_configuration_file | ${GLITE_LOCATION}/etc/rgma-flexible-archiver/glue-config.props | Configuration file used to set up the flexible-archiver database and to select which tables are to be backed up. By default, the glue and service tables are selected. (See also parameter ‘rgma.server. Example: '/my/path/my_config_file.props' |
| System Parameters | | |
| rgma.server. | R-GMA | Path under which the R-GMA server should be deployed. Example: R-GMA |
| rgma.server. | R-GMA.war | Name of the war file for the R-GMA server. Example: R-GMA.war |

Table 5: R-GMA server Configuration Parameters
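The relationship between the run switches and the hostname parameters described in Tables 4 and 5 can be checked before the configuration script is run. The following is a minimal sketch and not part of gLite: the function name `check_rgma_hostnames` and the plain dictionary used to hold the values are assumptions made for illustration. Only the parameter names rgma.schema.hostname, rgma.registry.hostname, rgma.server.run_schema_service and rgma.server.run_registry_service are taken from the tables.

```python
def check_rgma_hostnames(config, local_hostname):
    """Return a list of problems found in the schema/registry settings.

    `config` is a hypothetical dict of parameter values (strings, or a list
    of strings for the array parameter rgma.registry.hostname).
    """
    problems = []
    schema_host = config.get("rgma.schema.hostname", "")
    registry_hosts = config.get("rgma.registry.hostname", [])

    if not schema_host:
        problems.append("rgma.schema.hostname is not set")
    if not registry_hosts:
        problems.append("rgma.registry.hostname needs at least one entry")

    # A locally run schema service implies the schema host is this machine.
    if (config.get("rgma.server.run_schema_service") == "yes"
            and schema_host != local_hostname):
        problems.append("run_schema_service is 'yes' but "
                        "rgma.schema.hostname is not the local host")

    # A locally run registry implies this machine is in the registry list.
    if (config.get("rgma.server.run_registry_service") == "yes"
            and local_hostname not in registry_hosts):
        problems.append("run_registry_service is 'yes' but the local host "
                        "is not listed in rgma.registry.hostname")

    return problems


if __name__ == "__main__":
    example = {
        "rgma.schema.hostname": "lxb1420.cern.ch",
        "rgma.registry.hostname": ["lxb1420.cern.ch"],
        "rgma.server.run_schema_service": "yes",
        "rgma.server.run_registry_service": "yes",
    }
    for problem in check_rgma_hostnames(example, "lxb1420.cern.ch"):
        print(problem)
```

An empty result means the four settings are mutually consistent; it does not verify that the named hosts are reachable.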

The R-GMA configuration script performs the following steps:

1. Reads the following environment variables if set in the environment or in the global gLite configuration file $GLITE_LOCATION/etc/config/glite-global.cfg.xml:

GLITE_LOCATION_VAR [default is /var/glite]

GLITE_LOCATION_LOG [default is /var/log/glite]

GLITE_LOCATION_TMP [default is /tmp/glite]

2. Sets the following environment variables if not already set using the values set in the global and R-GMA configuration files:

GLITE_LOCATION [=/opt/glite if not set anywhere]

CATALINA_HOME to the location specified in the global configuration file [default is /var/lib/tomcat5/]

JAVA_HOME to the location specified in the global configuration file

3. Configures the gLite Security Utilities module

4. Checks the directory structure of $GLITE_LOCATION.

5. Loads the R-GMA server configuration file $GLITE_LOCATION/etc/config/glite-rgma-server.cfg.xml and the R-GMA common configuration file $GLITE_LOCATION/etc/config/glite-rgma-common.cfg.xml and checks the configuration values.

6. Prepares the tomcat environment by:

a. setting the CATALINA_OPTS for the maximum java heap size ‘-Xmx’ to half the memory size of your machine.

b. setting the maximum number of threads for the http connector using the configuration value.

c. deploying the R-GMA server application by creating the corresponding context file in $CATALINA_HOME/conf/Catalina/localhost/XXX.xml where XXX is the deploy path name of the R-GMA server specified in the configuration file (the default is R-GMA).

7. Configures the general R-GMA setup by running the R-GMA setup script $GLITE_LOCATION/share/rgma/scripts/rgma-setup.py using the configuration values from the configuration file for server, schema and registry hostname.

8. Exports the environment variable RGMA_HOME to $GLITE_LOCATION

9. Starts the MySQL server.

10. Sets the MySQL root password using the configuration value. If the password is already set, the script verifies if the present password and the one specified by the configuration file are the same.

11. Configures the R-GMA server by running the R-GMA server setup script $GLITE_LOCATION/share/rgma/scripts/rgma-server-setup.py using the options to run or not run a schema, registry and browser from the configuration file.

12. Fills the MySQL DB with the R-GMA configuration.

13. Configures the R-GMA server security by updating the file $GLITE_LOCATION/etc/rgma-server/ServletAuthentication.props with the location of the key and certificate files for tomcat.

14. If the site publisher or data archiver (flexible-archiver) are turned on in the configuration, the R-GMA client security is configured:

a. The hostkey and certificates are copied to the .cert subdirectory of the R-GMA user home directory.

b. The security configuration file for the client, $GLITE_LOCATION/etc/rgma/ClientAuthentication.props, is updated with the location of the certificate and key files.

15. If the site publisher is turned on in the configuration, the site publisher will be configured:

a. The configuration file $GLITE_LOCATION/etc/rgma-publish-site/site.props is updated with the site name and the corresponding contact addresses.

16. If the data archiver (flexible-archiver) is turned on in the configuration, the flexible archiver is configured:

a. The configuration file for the archiver properties, specified in the configuration parameter ‘rgma.server.archiver_configuration_file’, is copied to $GLITE_LOCATION/etc/rgma-flexible-archiver/flexy.props.

b. The flexible-archiver database is set up via

$GLITE_LOCATION/bin/rgma-flexible-archiver-db-setup $GLITE_LOCATION/etc/rgma-flexible-archiver/flexy.props

17. Starts the MySQL server.

18. Starts the tomcat server and waits for it to finish starting up before continuing.

19. Starts the data archiver if enabled.

20. Starts the site publisher if enabled.
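Step 6a above sets the maximum Java heap size to half of the machine's memory. The sketch below illustrates that calculation only. How the gLite script actually determines the memory size is not described in this guide, so the use of `os.sysconf` and the function names `total_memory_bytes` and `catalina_opts_for` are assumptions made for illustration.

```python
import os


def total_memory_bytes():
    """Physical memory in bytes, read via POSIX sysconf (assumed approach)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")


def catalina_opts_for(memory_bytes):
    """Return a '-Xmx' option set to half of the given memory, in megabytes."""
    half_mb = memory_bytes // 2 // (1024 * 1024)
    return "-Xmx%dm" % half_mb


if __name__ == "__main__":
    # Print the value that would be placed in CATALINA_OPTS on this machine.
    print(catalina_opts_for(total_memory_bytes()))
```

For example, on a machine with 2 GB of memory the function yields `-Xmx1024m`.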

The R-GMA Client module is a set of client APIs in C, C++, Java and Python to access the information and monitoring functionality of the R-GMA system. The client is automatically installed as part of the User Interface and Worker Node.

These installation instructions are based on the RPMS distribution of gLite. It is also assumed that the target server platform is Red Hat Linux 3.0 or any binary compatible distribution, such as Scientific Linux or CentOS. Whenever a package needed by gLite is not distributed as part of gLite itself, it is assumed it can be found in the list of RPMS of the original OS distribution.

Install one or more Certificate Authority certificates in /etc/grid-security/certificates. The complete list of CA certificates can be downloaded in RPMS format from the Grid Policy Management Authority web site (http://www.gridpma.org/). A special security module called glite-security-utils (gLite Security Utilities) is installed and configured automatically when installing and configuring the R-GMA Client (refer to Chapter 5 for more information about the Security Utilities module). The module contains the latest version of the CA certificates plus a number of certificate and security utilities. In particular, this module installs the glite-fetch-crl, glite-mkgridmap and mkgridmap.py scripts and sets up cron jobs that periodically check for updated revocation lists and grid-mapfile entries if required.

The Java JRE or JDK are required to run the R-GMA client java API. This release requires v. 1.4.2 (revision 04 or greater). The JDK/JRE version to be used is a parameter in the configuration file. Please change it according to your version and location.

Due to license reasons, we cannot redistribute Java. Please download it from http://java.sun.com/ and install it if you have not yet installed it.

If you install the client as part of another deployment module (e.g. the UI), the R-GMA client is installed automatically and you can continue with the configuration description in the next section. Otherwise, the installation steps are:

| Parameter | Default value | Description |
|---|---|---|
| User-defined Parameters | | |
| Advanced Parameters | | |
| glite.installer.verbose | true | Enable verbose output |
| System Parameters | | |
| set.proxy.path | false | If this parameter is true, the configuration script sets the GRID_PROXY_FILE and X509_USER_PROXY environment variables to the default value /tmp/x509up_u`id -u`. The parameter is set to false by default, since these environment variables are normally handled by other modules (like the gLite User Interface and the CE job wrapper on the Worker Nodes) and setting them here may create conflicts. It may, however, be necessary to let the R-GMA client set the variables for stand-alone use. Example: false [type: 'boolean'] |

Table 6: R-GMA Client Configuration Parameters
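The effect of the set.proxy.path parameter can be illustrated with a short sketch. It is not the gLite implementation: the function names `default_proxy_path` and `proxy_environment` are hypothetical, and the sketch assumes that existing values of the two variables are overwritten when the parameter is true, which this guide does not specify.

```python
import os


def default_proxy_path(uid=None):
    """Return /tmp/x509up_u<uid>, the default value named in Table 6."""
    if uid is None:
        uid = os.getuid()
    return "/tmp/x509up_u%d" % uid


def proxy_environment(set_proxy_path, environ=None, uid=None):
    """Return a copy of the environment, with both proxy variables set
    to the default path when set_proxy_path is true."""
    env = dict(os.environ if environ is None else environ)
    if set_proxy_path:
        path = default_proxy_path(uid)
        env["GRID_PROXY_FILE"] = path
        env["X509_USER_PROXY"] = path
    return env


if __name__ == "__main__":
    print(proxy_environment(True)["X509_USER_PROXY"])
```

With the default value of false the environment is returned unchanged, which matches the intent of leaving these variables to the User Interface or the CE job wrapper.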

If you use the R-GMA client as a sub-deployment module that is downloaded and used by another deployment module, the configuration script is run automatically by the configuration script of the other deployment module and you can skip the following steps. Otherwise:

The R-GMA Client configuration script performs the following steps:

The R-GMA servicetool is an R-GMA client tool to publish information about the services it knows about and their current status. The tool is divided into three parts:

A daemon regularly monitors configuration files containing information about the services a site has installed. At regular intervals, this information is published to the ServiceTable. Each service specifies a script that needs to be run to obtain status information. The scripts are run by the daemon at the specified frequency and the results are inserted into the ServiceStatus table.

The second part of the tool is a command line program that modifies the configuration files to add, delete and modify services. It does not communicate with the daemon directly, but the next time the daemon scans the configuration file the changes will be published.

The third part of the tool is a command line program to query the service tables for status information.

This service is normally installed automatically with other modules and doesn’t need to be installed independently.

These installation instructions are based on the RPMS distribution of gLite. It is also assumed that the target server platform is Red Hat Linux 3.0 or any binary compatible distribution, such as Scientific Linux or CentOS. Whenever a package needed by gLite is not distributed as part of gLite itself, it is assumed it can be found in the list of RPMS of the original OS distribution.

Install one or more Certificate Authority certificates in /etc/grid-security/certificates. The complete list of CA certificates can be downloaded in RPMS format from the Grid Policy Management Authority web site (http://www.gridpma.org/). A special security module called glite-security-utils (gLite Security Utilities) is installed and configured automatically when installing and configuring the R-GMA Servicetool (refer to Chapter 5 for more information about the Security Utilities module). The module contains the latest version of the CA certificates plus a number of certificate and security utilities. In particular, this module installs the glite-fetch-crl, glite-mkgridmap and mkgridmap.py scripts and sets up cron jobs that periodically check for updated revocation lists and grid-mapfile entries if required.

The Java JRE or JDK are required to run the R-GMA servicetool. This release requires v. 1.4.2 (revision 04 or greater). The JDK/JRE version to be used is a parameter in the configuration file. Please change it according to your version and location.

Due to license reasons, we cannot redistribute Java. Please download it from http://java.sun.com/ and install it if you have not yet installed it.

If you install the servicetool as part of another deployment module (e.g. the Computing element), the R-GMA servicetool is installed automatically and you can continue with the configuration description in the next section. Otherwise, the installation steps are:

| Parameter | Default value | Description |
|---|---|---|
| User-defined Parameters | | |
| rgma.servicetool.sitename | | DNS name of the site for the published services (in general the hostname). Example: lxb2029.cern.ch |
| Advanced Parameters | | |
| glite.installer.verbose | true | Enable verbose output. Example: true |
| rgma.servicetool.activate | true | Turn the servicetool on or off for the node. Example: true [type: 'boolean'] |
| System Parameters | | |

Table 7: R-GMA servicetool configuration parameters

| Parameter | Default value | Description |
|---|---|---|
| User-defined Parameters | | |
| rgma.servicetool.enable | true | Publish this service via the R-GMA servicetool. If this variable is set to false, the other values below are not taken into account. Example: true |
| rgma.servicetool.name | | Name of the service. This should be globally unique. Example: host.name.service_name |
| rgma.servicetool. | | URL to contact the service at. This should be unique for each service. Example: http://your.host.name:port/serviceGroup/ServiceName |
| rgma.servicetool. | | The service type. This should be uniquely defined for each service type. Currently two methods of type naming are recommended: (a) the targetNamespace from the WSDL document followed by a space and then the service name; (b) a URL owned by the body or individual who defines the service type. Example: Name of service type |
| rgma.servicetool. | | Service version in the form ‘major.minor.patch’. Example: 1.2.3 |
| rgma.servicetool. | | How often to publish the service details (like endpoint, version etc.). (Unit: seconds) Example: 600 |
| rgma.servicetool. | | Script to run to determine the service status. This script should return an exit code of 0 to indicate the service is OK; other values should indicate an error. The first line of the standard output should be a brief message describing the service status (e.g. ‘Accepting connections’). Example: /opt/glite/bin/myService/serviceStatus |
| rgma.servicetool. | | How often to check and publish the service status. (Unit: seconds) Example: 60 |
| rgma.servicetool.url_wsdl | | URL of a WSDL document for the service (leave blank if the service has no WSDL). |
| rgma.servicetool. | | URL of a document containing a detailed description of the service and how it should be used. Example: http://your.host.name/service/semantics.html |
| Advanced Parameters | | |
| System Parameters | | |

Table 8: R-GMA servicetool configuration parameters for a service to be published via the R-GMA servicetool
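The contract for a status script given in Table 8 (exit code 0 means the service is OK, and the first line of standard output is a brief status message) can be exercised with a small test harness before the script is handed to the servicetool. This is a sketch under that contract only; the function name `check_service_status` is hypothetical and the code does not reproduce how the servicetool daemon itself runs the scripts.

```python
import subprocess
import sys


def check_service_status(command):
    """Run a status command (an argv list) and return (ok, message).

    ok is True when the exit code is 0; message is the first line of
    standard output, or an empty string if nothing was printed.
    """
    result = subprocess.run(command,
                            stdout=subprocess.PIPE,
                            stderr=subprocess.DEVNULL,
                            universal_newlines=True)
    lines = result.stdout.splitlines()
    message = lines[0] if lines else ""
    return result.returncode == 0, message


if __name__ == "__main__":
    # Stand-in for a real status script such as the example path in Table 8.
    demo = [sys.executable, "-c", "print('Accepting connections')"]
    print(check_service_status(demo))
```

Replacing the stand-in command with the path of a real status script shows what would be reported for that service.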

The R-GMA configuration script performs the following steps:

1. Sets the following environment variables if not already set using the values set in the global and R-GMA configuration files:

GLITE_LOCATION [=/opt/glite if not set anywhere]

JAVA_HOME to the location specified in the global configuration file

2. Reads the following environment variables if set in the environment or in the global gLite configuration file $GLITE_LOCATION/etc/config/glite-global.cfg.xml:

GLITE_LOCATION_VAR [default is /var/glite]

GLITE_LOCATION_LOG [default is /var/log/glite]

GLITE_LOCATION_TMP [default is /tmp/glite]

3. Checks the directory structure of $GLITE_LOCATION.

4. Configures the R-GMA servicetool by creating the servicetool configuration file at $GLITE_LOCATION/etc/rgma-servicetool/rgma-servicetool.conf that specifies the sitename.

5. Takes the set of parameters for the R-GMA servicetool from each instance in the service configuration files and, for each of these instances, creates a configuration file at $GLITE_LOCATION/etc/rgma-servicetool/services/XXXX.service, where XXXX is the name of the service.

6. Starts the R-GMA servicetool via

/etc/init.d/rgma-servicetool start
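To locate the per-service file created in step 5, the path can be derived from the service name and GLITE_LOCATION, using the /opt/glite default from step 1. The helper below is a hypothetical convenience for checking an installation, not part of gLite, and it makes no assumption about the content of the generated files.

```python
import os


def service_config_path(service_name, glite_location=None):
    """Return the expected path of the .service file for a service name."""
    if glite_location is None:
        # Same fallback as step 1: /opt/glite if GLITE_LOCATION is not set.
        glite_location = os.environ.get("GLITE_LOCATION", "/opt/glite")
    return os.path.join(glite_location, "etc", "rgma-servicetool",
                        "services", service_name + ".service")


if __name__ == "__main__":
    path = service_config_path("host.name.service_name")
    print(path, "exists" if os.path.exists(path) else "is missing")
```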

The R-GMA GadgetIN (GIN) is an R-GMA client to extract information from MDS and to republish it to R-GMA. The R-GMA GadgetIN is installed and used by the Computing Element (CE) to publish its information and does not need to be installed independently.

These installation instructions are based on the RPMS distribution of gLite. It is also assumed that the target server platform is Red Hat Linux 3.0 or any binary compatible distribution, such as Scientific Linux or CentOS. Whenever a package needed by gLite is not distributed as part of gLite itself, it is assumed it can be found in the list of RPMS of the original OS distribution.

Install one or more Certificate Authority certificates in /etc/grid-security/certificates. The complete list of CA certificates can be downloaded in RPMS format from the Grid Policy Management Authority web site (http://www.gridpma.org/). A special security module called glite-security-utils (gLite Security Utilities) is installed and configured automatically when installing and configuring the R-GMA GadgetIN (refer to Chapter 5 for more information about the Security Utilities module). The module contains the latest version of the CA certificates plus a number of certificate and security utilities. In particular, this module installs the glite-fetch-crl, glite-mkgridmap and mkgridmap.py scripts and sets up cron jobs that periodically check for updated revocation lists and grid-mapfile entries if required.

The Java JRE or JDK are required to run the R-GMA GadgetIN. This release requires v. 1.4.2 (revision 04 or greater). The JDK/JRE version to be used is a parameter in the configuration file. Please change it according to your version and location.

Due to license reasons, we cannot redistribute Java. Please download it from http://java.sun.com/ and install it if you have not yet installed it.

If you install the R-GMA GadgetIN as part of another deployment module (e.g. the Computing element), the R-GMA GadgetIN is installed automatically and you can continue with the configuration description in the next section. Otherwise, the installation steps are:

1. Download the latest version of the R-GMA GadgetIN installation script glite-rgma-gin_installer.sh from the gLite web site. It is recommended to download the script in a clean directory.

2. Make the script executable

chmod u+x glite-rgma-gin_installer.sh

and execute it, or execute it with

sh glite-rgma-gin_installer.sh

3. Run the script as root. All the required RPMS are downloaded from the gLite software repository in the directory glite-rgma-gin next to the installation script and the installation procedure is started. If some RPM is already installed, it is upgraded if necessary. Check the screen output for errors or warnings.

4. If the installation is performed successfully, the following components are installed:

gLite in /opt/glite ($GLITE_LOCATION)

gLite-essentials java in $GLITE_LOCATION/externals/share

5. The gLite R-GMA GadgetIN configuration script is installed in $GLITE_LOCATION/etc/config/scripts/glite-rgma-gin-config.py. All the necessary template configuration files are installed into $GLITE_LOCATION/etc/config/templates/. The next section will guide you through the different files and necessary steps for the configuration.

If you use the R-GMA GadgetIN as a sub-deployment module that is downloaded and used by another deployment module (e.g. the CE), the configuration script is run automatically by the configuration script of the other deployment module and you can skip step 3. Otherwise:

| Parameter | Default value | Description |
|---|---|---|
| User-defined Parameters | | |
| rgma.gin.run_generic_info_provider | | Run the generic information provider (gip) backend (yes\|no). Within LCG this comes with the CE and SE. Example: no |
| rgma.gin.run_fmon_provider | | Run the fmon backend (yes\|no). This is used by LCG for gridice. Example: no |
| rgma.gin.run_ce_provider | | Run the CE backend (yes\|no). |
| Advanced Parameters | | |
| glite.installer.verbose | true | Enable verbose output. Example: true |
| System Parameters | | |

Table 9: R-GMA GadgetIN configuration parameters

The R-GMA GadgetIN configuration script performs the following steps:

VOMS serves as a central repository for user authorization information, providing support for sorting users into a general group hierarchy, keeping track of their roles, etc. Its functionality may be compared to that of a Kerberos KDC server. The VOMS Admin service is a web application providing tools for administering member databases for VOMS, the Virtual Organization Membership Service.

VOMS Admin provides an intuitive web user interface for daily administration tasks and a SOAP interface for remote clients. (The entire functionality of the VOMS Admin service is accessible via the SOAP interface.) The Admin package includes a simple command-line SOAP client that is useful for automating frequently occurring batch operations, or simply as an alternative to the full-blown web interface. It is also useful for bootstrapping the service.

These installation instructions are based on the RPMS distribution of gLite. It is also assumed that the target server platform is Red Hat Linux 3.0 or any binary compatible distribution, such as Scientific Linux or CentOS. Whenever a package needed by gLite is not distributed as part of gLite itself, it is assumed it can be found in the list of RPMS of the original OS distribution.

1. Install one or more Certificate Authority certificates in /etc/grid-security/certificates. The complete list of CA certificates can be downloaded in RPMS format from the Grid Policy Management Authority web site (http://www.gridpma.org/). A special security module called glite-security-utils can be installed by downloading the script glite-security-utils_installer.sh from the gLite web site (http://www.glite.org) and running it (Chapter 5). The module contains the latest version of the CA certificates plus a number of certificate and security utilities. In particular, this module installs the glite-fetch-crl script and sets up a crontab that periodically checks for updated revocation lists.

2. Install the server host certificate hostcert.pem and key hostkey.pem in /etc/grid-security

The Java JRE or JDK are required to run the R-GMA Server. This release requires v. 1.4.2 (revision 04 or greater). The JDK/JRE version to be used is a parameter in the configuration file. Please change it according to your version and location.

Due to license reasons, we cannot redistribute Java. Please download it from http://java.sun.com/ and install it if you have not yet installed it.

1. Download the latest version of the VOMS Server installation script glite-voms-server_installer.sh from the gLite web site. It is recommended to download the script into a clean directory.

2. Make the script executable (chmod u+x glite-voms-server_installer.sh) and execute it

3. Run the script as root. All the required RPMS are downloaded from the gLite software repository in the directory glite-voms-server next to the installation script and the installation procedure is started. If some RPM is already installed, it is upgraded if necessary. Check the screen output for errors or warnings.

4.

If the installation is performed successfully, the following components

are installed:

gLite in /opt/glite

Tomcat in /var/lib/tomcat5

5. The gLite VOMS Server and VOMS Administration configuration script is installed in $GLITE_LOCATION/etc/config/scripts/glite-voms-server-config.py. A template configuration file is installed in $GLITE_LOCATION/etc/config/templates/glite-voms-server.cfg.xml

1. Copy the global configuration file template $GLITE_LOCATION/etc/config/templates/glite-global.cfg.xml to $GLITE_LOCATION/etc/config, open it and modify the parameters if required (Table 1)

2. Copy the configuration file template from $GLITE_LOCATION/etc/config/templates/glite-voms-server.cfg.xml to $GLITE_LOCATION/etc/config/glite-voms-server.cfg.xml and modify the parameter values as necessary (Table 10)

3. Some parameters have default values; others must be changed by the user. All parameters that must be changed have a token value of changeme. Since multiple instances of the VOMS Server can be installed on the same node (one per VO), some of the parameters refer to individual instances. Each instance is contained in a separate, named <instance/> tag. A default instance is already defined and can be configured directly. Additional instances can be added by copying and pasting the <instance/> section, assigning a name and changing the parameter values as desired.

The following parameters can be set:

| Parameter | Default value | Description |
|---|---|---|
| VO Instances parameters | | |
| voms.vo.name | | Name of the VO associated with the VOMS instance |
| voms.port.number | | Port number of the VOMS instance |
| vo.admin.e-mail | | E-mail address of the VO admin |
| vo.ca.URI | | URI from where the CRLs are downloaded |
| User-defined Parameters | | |
| voms.mysql.passwd | | Password (in clear) of the root user of the MySQL server used for VOMS databases |
| Advanced Parameters | | |
| glite.installer.verbose | true | Enable verbose output |
| glite.installer.checkcerts | true | Enable check of host certificates |
| voms-admin.install | true | Install the VOMS Admin interface on this server |
| System Parameters | | |

Table 10: VOMS Configuration Parameters

4. As root, run the VOMS Server configuration script $GLITE_LOCATION/etc/config/scripts/glite-voms-server-config.py

5. The VOMS Server is now ready.
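Because every parameter that must be changed carries the token value changeme (step 3), a configuration file can be scanned for leftover tokens before the configuration script is run. The following is a minimal sketch, not a gLite tool. The XML layout in `SAMPLE` (a `parameter` element with `name` and `value` attributes inside `instance`) and the instance name `myvo` are assumptions made for illustration, since the exact schema of the configuration file is not reproduced in this guide. The scan itself looks at every attribute and element text, so it does not depend on that layout.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment; the real file layout may differ.
SAMPLE = """<config>
  <instance name="myvo">
    <parameter name="voms.vo.name" value="changeme"/>
    <parameter name="voms.port.number" value="15000"/>
  </instance>
</config>"""


def find_changeme(xml_text, token="changeme"):
    """Return (tag, name attribute, attribute holding the token) tuples.

    The third item is None when the token is the element text rather
    than an attribute value.
    """
    hits = []
    for elem in ET.fromstring(xml_text).iter():
        for attr, value in elem.attrib.items():
            if value == token:
                hits.append((elem.tag, elem.attrib.get("name"), attr))
        if elem.text is not None and elem.text.strip() == token:
            hits.append((elem.tag, elem.attrib.get("name"), None))
    return hits


if __name__ == "__main__":
    for hit in find_changeme(SAMPLE):
        print("still set to changeme:", hit)
```

An empty result indicates that no token values remain; it does not validate the values that were entered.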

The VOMS Server configuration script performs the following steps:

1. Sets the following environment variables if not already set using the values defined in the global and VOMS Server configuration files:

GLITE_LOCATION [default is /opt/glite]

CATALINA_HOME [default is /var/lib/tomcat5]

2. Reads the following environment variables if set in the environment or in the global gLite configuration file $GLITE_LOCATION/etc/config/glite-global.cfg.xml:

GLITE_LOCATION_VAR

GLITE_LOCATION_LOG

GLITE_LOCATION_TMP

3. Loads the VOMS Server configuration file $GLITE_LOCATION/etc/config/glite-voms-server.cfg.xml

4. Sets the following additional environment variables needed internally by the services (this requirement should disappear in the future):

PATH=$GLITE_LOCATION/bin:$GLITE_LOCATION/externals/bin:$GLOBUS_LOCATION/bin:$PATH

LD_LIBRARY_PATH=$GLITE_LOCATION/lib:$GLITE_LOCATION/externals/lib:$GLOBUS_LOCATION/lib:$LD_LIBRARY_PATH
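The two search paths set in step 4 can be reproduced when running the VOMS tools by hand, for example from a wrapper script. The sketch below only illustrates the ordering of the directories listed above. The function name `voms_environment` is hypothetical, and the value /opt/globus used for GLOBUS_LOCATION in the example is an assumption, since this section does not state its default.

```python
import os


def voms_environment(glite_location, globus_location, environ=None):
    """Return a copy of the environment with PATH and LD_LIBRARY_PATH
    prefixed by the gLite and Globus directories from step 4."""
    env = dict(os.environ if environ is None else environ)
    prefixes = {
        "PATH": ["bin", "externals/bin"],
        "LD_LIBRARY_PATH": ["lib", "externals/lib"],
    }
    for variable, subdirs in prefixes.items():
        dirs = [glite_location + "/" + sub for sub in subdirs]
        # The Globus directory uses the last path component (bin or lib).
        dirs.append(globus_location + "/" + subdirs[0])
        existing = env.get(variable, "")
        if existing:
            dirs.append(existing)
        env[variable] = ":".join(dirs)
    return env


if __name__ == "__main__":
    env = voms_environment("/opt/glite", "/opt/globus")
    print(env["PATH"])
    print(env["LD_LIBRARY_PATH"])
```

Unlike a literal shell expansion, the sketch omits the trailing separator when the variable was previously empty, so no empty path entry is created.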

After the installation and configuration of the VOMS Server and Admin Tools, it is necessary to register at least one administrator for each registered VO by running the following command on the VOMS server:

$GLITE_LOCATION/bin/vomsadmin --vo <VO name> create-user <certificate.pem> assign-role VO VO-Admin <certificate.pem>

where <VO name> is the name of the registered VO for which to register the administrator and <certificate.pem> is the path to the public certificate of the administrator. For more information, please refer to the VOMS Administrative Tools guide on the gLite web site.

The Workload Management System (WMS) comprises a set of grid middleware components responsible for the distribution and management of tasks across grid resources, in such a way that applications are conveniently, efficiently and effectively executed.

The core component of the Workload Management System is the Workload Manager (WM), whose purpose is to accept and satisfy requests for job management coming from its clients. For a computation job there are two main types of request: submission and cancellation.

In particular the meaning of the submission request is to pass the responsibility of the job to the WM. The WM will then pass the job to an appropriate Computing Element for execution, taking into account the requirements and the preferences expressed in the job description. The decision of which resource should be used is the outcome of a matchmaking process between submission requests and available resources.

These installation instructions are based on the RPM distribution of gLite. It is also assumed that the target server platform is Red Hat Enterprise Linux 3.0 or any binary compatible distribution, such as Scientific Linux or CentOS. Whenever a package needed by gLite is not distributed as part of gLite itself, it is assumed it can be found in the list of RPMs of the original OS distribution.

The Java JRE or JDK is required to run the R-GMA Servicetool service. This release requires v. 1.4.2 (revision 04 or greater). The JDK/JRE version to be used is a parameter in the configuration file. Please change it according to your version and location.

For licensing reasons, we cannot redistribute Java. Please download it from http://java.sun.com/ and install it if you have not already done so.

| Parameter | Default value | Description |
|---|---|---|
| User-defined Parameters | | |
| glite.user.name | | Name of the user account used to run the gLite services on this WMS node |
| glite.user.group | | Group of the user specified in the 'glite.user.name' parameter. This group must be different from the pool account group specified by the parameter 'pool.account.group'. |
| voms.voname | | The names of the VOs that this WMS node can serve (array parameter) |
| voms.vomsnode | | The full hostname of the VOMS server responsible for each VO. Even if the same server is responsible for more than one VO, there must be exactly one entry for each VO listed in the 'voms.voname' parameter. Example: host.domain.org |
| voms.vomsport | | The port on the VOMS server listening for requests for each VO. This is used in the vomses configuration file. Example: 15000 |
| voms.vomscertsubj | | The subject of the host certificate of the VOMS server for each VO. Example: /C=ORG/O=DOMAIN/OU=GRID/CN=host.domain.org |
| pool.account.basename | | The prefix of the set of pool accounts to be created. Existing pool accounts with this prefix are not recreated. |
| pool.account.group | | The group name of the pool accounts to be used. This group must be different from the WMS service account group specified by the parameter 'glite.user.group'. |
| pool.account.number | | The number of pool accounts to create. Each account will be created with a username of the form prefixXXX, where prefix is the value of the pool.account.basename parameter. If matching pool accounts already exist, they are not recreated. The range of values for this parameter is 1-999. |
| wms.cemon.port | | The port number on which this WMS server is listening for notifications from CEs when working in pull mode. Leave this parameter empty or comment it out if you don't want to activate pull mode for this WMS node. Example: 5120 |
| wms.cemon.endpoints | | The endpoint(s) of the CE(s) that this WMS node should query when working in push mode. Leave this parameter empty or comment it out if you don't want to activate push mode for this WMS node. Example: 'http://lxb0001.cern.ch:8080/ce-monitor/services/CEMonitor' |
| information.index.host | | Host name of the Information Index node. Leave this parameter empty or comment it out if you don't want to use a BD-II for this WMS node. |
| cron.mailto | | E-mail address for sending cron job notifications |
| condor.condoradmin | | E-mail address of the Condor administrator |
| Advanced Parameters | | |
| glite.installer.verbose | true | Sets the verbosity of the configuration script output |
| glite.installer.checkcerts | true | Switches on/off the check for the existence of the host certificate files |
| GSIWUFTPPORT | 2811 | Port where the Globus FTP server is listening |
| GSIWUFTPDLOG | ${GLITE_LOCATION_LOG}/gsiwuftpd.log | Location of the Globus FTP server log file |
| GLOBUS_FLAVOR_NAME | gcc32dbg | The Globus libraries flavour to be used |
| condor.scheddinterval | 10 | Condor scheduling interval |
| condor.releasedir | /opt/condor-6.7.6 | Condor installation directory |
| CONDOR_CONFIG | ${condor.releasedir}/etc/condor_config | Condor global configuration file |
| condor.blahpollinterval | 10 | How often blahp polls for new jobs |
| information.index.port | 2170 | Port number of the Information Index |
| information.index.base_dn | mds-vo-name=local, o=grid | Base DN of the Information Index LDAP server |
| wms.config.file | ${GLITE_LOCATION}/etc/glite_wms.conf | Location of the WMS configuration file |
| System Parameters | | |
| condor.localdir | /var/local/condor | Condor local directory |
| condor.daemonlist | MASTER, SCHEDD, COLLECTOR, NEGOTIATOR | List of the Condor daemons to start. This must be a comma-separated list of services as it would appear in the Condor configuration file. |

Table 11: WMS Configuration Parameters
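Several of the user-defined parameters carry constraints that are easy to get wrong. The following sketch checks them before the configuration script is run. It is not part of gLite: the function name `check_wms_parameters` is hypothetical, the parameters are assumed to have already been read into a plain dictionary (the real values live in the XML configuration file), and the one-entry-per-VO rule stated for 'voms.vomsnode' is assumed to apply to the other per-VO parameters as well.

```python
def check_wms_parameters(params):
    """Return a list of problems found in a dict of WMS parameters.

    Only constraints stated in the parameter descriptions are checked.
    """
    problems = []

    # The service account group and the pool account group must differ.
    if params["glite.user.group"] == params["pool.account.group"]:
        problems.append("glite.user.group must differ from pool.account.group")

    # The number of pool accounts must be in the range 1-999.
    if not 1 <= params["pool.account.number"] <= 999:
        problems.append("pool.account.number must be between 1 and 999")

    # Each VO needs exactly one entry in every per-VO array parameter
    # (assumption: this also holds for the port and certificate subject).
    vo_count = len(params["voms.voname"])
    for name in ("voms.vomsnode", "voms.vomsport", "voms.vomscertsubj"):
        if len(params[name]) != vo_count:
            problems.append(name + " must have one entry per VO")

    return problems


# Hypothetical values for a node serving two VOs from the same VOMS server.
example = {
    "glite.user.group": "glite",
    "pool.account.group": "wmspool",
    "pool.account.number": 50,
    "voms.voname": ["vo1", "vo2"],
    "voms.vomsnode": ["host.domain.org", "host.domain.org"],
    "voms.vomsport": [15000, 15001],
    "voms.vomscertsubj": ["/C=ORG/O=DOMAIN/OU=GRID/CN=host.domain.org"] * 2,
}
print(check_wms_parameters(example))
```

An empty list means that none of the checked constraints is violated; it does not guarantee that the configuration is otherwise complete.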

i. Local Logger

ii. Proxy Renewal Service

iii. Log Monitor Service

iv. Job Controller Service

v. Network Server

vi. Workload Manager

Again, the necessary steps are described in section 6.2.4.6.

Note: Steps 1, 2 and 3 can also be performed by means of the remote site configuration file or a combination of local and remote configuration files.

The WMS configuration script performs the following steps:

The WMS configuration script can be run with the following command-line parameters to manage the services:

| Command | Description |
|---|---|
| glite-wms-config.py --start | Starts all WMS services (or restarts them if they are already running) |
| glite-wms-config.py --stop | Stops all WMS services |
| glite-wms-config.py --status | Verifies the status of all services. The exit code is 0 if all services are running, 1 in all other cases |
| glite-wms-config.py --startservice=xxx | Starts the WMS xxx subservice. xxx can be one of the following: condor = the Condor master and daemons; ftpd = the Grid FTP daemon; lm = the gLite WMS Logger Monitor daemon; wm = the gLite WMS Workload Manager daemon; ns = the gLite WMS Network Server daemon; jc = the gLite WMS Job Controller daemon; pr = the gLite WMS Proxy Renewal daemon; lb = the gLite WMS Logging & Bookkeeping client |
| glite-wms-config.py --stopservice=xxx | Stops the WMS xxx subservice. xxx can be one of the following: condor = the Condor master and daemons; ftpd = the Grid FTP daemon; lm = the gLite WMS Logger Monitor daemon; wm = the gLite WMS Workload Manager daemon; ns = the gLite WMS Network Server daemon; jc = the gLite WMS Job Controller daemon; pr = the gLite WMS Proxy Renewal daemon; lb = the gLite WMS Logging & Bookkeeping client |
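Because the --status option reports its result through the exit code, it can be used from monitoring scripts. The following is a minimal sketch, assuming the configuration script is on the PATH of the WMS node; the helper name `all_services_running` is hypothetical, and two stand-in commands are used so that the example runs on any machine.

```python
import subprocess
import sys


def all_services_running(command):
    """Run a status command and report whether it exited with code 0."""
    result = subprocess.run(command)
    return result.returncode == 0


# On a WMS node the command would be the one described above:
#   all_services_running(["glite-wms-config.py", "--status"])
# Here two stand-in commands simulate the two documented outcomes.
running = [sys.executable, "-c", "raise SystemExit(0)"]
stopped = [sys.executable, "-c", "raise SystemExit(1)"]
print(all_services_running(running), all_services_running(stopped))
```

Any non-zero exit code is treated as "not all services are running", which matches the documented behaviour of exit code 1 in all other cases.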

When the WMS configuration script is run, it installs the gLite script in the /etc/init.d directory and activates it to be run at boot. The gLite script runs the glite-wms-config.py --start command and makes sure that all necessary services are started in the correct order.

The WMS services are published to R-GMA using the R-GMA Servicetool service. The Servicetool service is automatically installed and configured when installing and configuring the WMS module. The WMS configuration file contains a separate configuration section (an <instance/>) for each WMS sub-service. The required values must be filled in the configuration file before running the configuration script.

For more details about the R-GMA Servicetool service, refer to section 6.2.4 of this guide.

The Logging and Bookkeeping service (LB) tracks jobs in terms of events (important points of job life, e.g. submission, finding a matching CE, starting execution etc.) gathered from various WMS components as well as CEs (all those have to be instrumented with LB calls).

The events are passed to a physically close component of the LB infrastructure (locallogger) in order to avoid network problems. This component stores them in a local disk file and takes over the responsibility to deliver them further.

The destination of an event is one of the Bookkeeping Servers (assigned statically to a job upon its submission). The server processes the incoming events to give a higher-level view of the job states (e.g. Submitted, Running, Done), which also contain various recorded attributes (e.g. JDL, destination CE name, job exit code, etc.).

Retrieval of both job states and raw events is available via legacy (EDG) and WS querying interfaces.

Besides actively querying for the job state, the user may also register to receive notifications on particular job state changes (e.g. when a job terminates). The notifications are delivered using an appropriate infrastructure. Within the EDG WMS, upon creation each job is assigned a unique, virtually non-recyclable job identifier (JobId) in a URL form.

The server part of the URL designates the bookkeeping server which gathers and provides information on the job for its whole life.
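Since the JobId has a URL form, the bookkeeping server can be recovered from it with a standard URL parser. The sketch below uses Python's `urllib.parse`; the function name `bookkeeping_server` and the example JobId (scheme, host, port and unique part) are made up for illustration and are not taken from a real deployment.

```python
from urllib.parse import urlsplit


def bookkeeping_server(job_id):
    """Return (host, port) of the server part of a JobId in URL form."""
    parts = urlsplit(job_id)
    return parts.hostname, parts.port


# Hypothetical JobId; host, port and the unique part are made up.
example_job_id = "https://lb.example.org:9000/a1B2c3D4e5F6"
print(bookkeeping_server(example_job_id))
```

If the JobId carries no explicit port, the port element of the result is `None`.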

LB tracks jobs in terms of events (e.g. Transfer from one WMS component to another, Run and Done when the job starts and stops execution). Each event type carries its specific attributes. The entire architecture is specialized for this purpose and is job-centric: any event is assigned to a unique Grid job. The events are gathered from various WMS components by the LB producer library, and passed on to the locallogger daemon, running physically close to avoid any sort of network problems.

The locallogger's task is storing the accepted event in a local disk file. Once it's done, confirmation is sent back and the logging library call returns, reporting success.
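The store-then-confirm behaviour described above can be sketched as follows. This is an illustration of the pattern only, not the locallogger implementation: the function name `accept_event`, the JSON line format and the event fields are assumptions introduced for the example.

```python
import json
import os
import tempfile


def accept_event(event, log_path):
    """Append one event to a local file and confirm only after it is on disk."""
    line = json.dumps(event, sort_keys=True) + "\n"
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(line)
        log_file.flush()
        os.fsync(log_file.fileno())  # force the write to disk before confirming
    return True  # stands for the confirmation sent back to the logging library


# Example with a hypothetical event and a temporary file.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "events.log")
    confirmed = accept_event(
        {"jobid": "https://lb.example.org:9000/xyz", "type": "Transfer"}, path)
    with open(path, encoding="utf-8") as log_file:
        stored = log_file.read()
print(confirmed, stored.strip())
```

The confirmation is returned only after the event has been written and synced, so a caller that receives it can rely on the event surviving a crash of the logging process.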